Archive for January, 2015

Innovation Fortune Cookies

If they made innovation fortune cookies, here’s what would be inside:

If you know how it will turn out, you waited too long.

Whether you like it or not, when you start something new, uncertainty carries the day.

Don’t define the idealized future state; advance the current state along its lines of evolutionary potential.

Try new things, then do more of what worked and less of what didn’t.

Without starting, you never start. Starting is the most important part.

Perfection is the enemy of progress, and so are experts.

Disruption is the domain of the ignorant and the scared.

Innovation is 90% people and the other half technology.

The best training solves a tough problem with new tools and processes, and the training comes along for the ride.

The only thing slower than going too slowly is going too quickly.

An innovation best practice – have no best practices.

Decisions are always made with judgment, even the good ones.

image credit – Gwen Harlow

Top Innovation Blogger of 2014

Innovation Excellence announced their top innovation bloggers of 2014, and, well, I topped the list!

The list is full of talented, innovative thinkers, and I’m proud to be part of such a wonderful group. I’ve read many of their posts and learned a lot. My special congratulations and thanks to: Jeffrey Baumgartner, Ralph Ohr, Paul Hobcraft, Gijs van Wulfen, and Tim Kastelle.

Honors and accolades are good and should be celebrated. As Rick Hanson explains in Hardwiring Happiness, positive experiences are far less sticky than negative ones and must be actively savored to be converted into neural structure. Today I celebrate.

Writing a blog post every week is a challenge, but it’s worth it. Each week I get to stare at a blank screen and create something from nothing, and each week I’m reminded that it’s difficult. But, more importantly, I’m reminded that the most important thing is to try. Each week I demonstrate to myself that I can push through my self-generated resistance. Some posts are better than others, but that’s not the point. The point is that it’s important to put myself out there.

With innovative work, there are a lot of highs and lows. Celebrating and savoring the highs is important, as long as I remember the lows will come. Though there’s a lot of uncertainty in innovation, I’m certain the lows will find me. And when that happens I want to be ready – ready to let go of the things that don’t go as expected. I expect things will go differently than I expect, and that seems to work pretty well.

I think with innovation, the middle way is best – not too high, not too low. But I’m not talking about moderating the goodness of my experiments; I’m talking about moderating my response to them. When things go better than my expectations, I actively hold onto my good feelings until they wane on their own. When things go poorly relative to my expectations, I feel sad for a bit, then let it go. Funny thing is – it’s all relative to my expectations.

I did not expect to be the number one innovation blogger, but that’s how it went. (And I’m thankful.) I don’t expect to be at the top of the list next year, but we’ll see how it goes.

For next year my expectations are to write every week and put my best into every post. We’ll see how it goes.

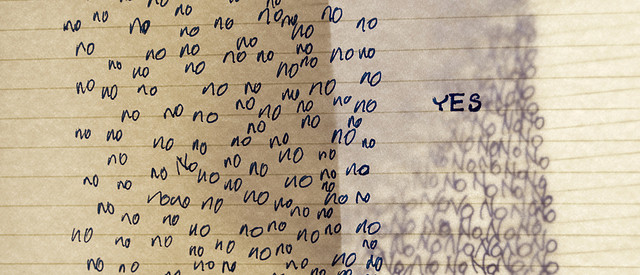

Battle Success With No-To-Yes

Everyone says they want innovation, but they don’t – they want the results of innovation.

Innovation is about bringing to life things that are novel, useful and successful. Novel and useful are nice, but successful pays the bills. Novel means new, and new means fear; useful means customers must find value in the newness we create, and that’s scary. No one likes fear, and, if possible, we’d skip novel and useful altogether, but we cannot. Success isn’t a thing in itself; it’s the result of something, and that something is novelty and usefulness.

Companies want success, and they want it with as little work and risk as possible. They pursue it with a focus on efficiency – do more with less and the stock price increases. With efficiency, it’s all about getting more out of what you have – don’t buy new machines or tools, get more out of the ones you have. And to reduce risk, it’s all about reducing newness – do more of what you did, and do it more efficiently. We’ve unnaturally tied success to the same old tricks done in the same old way to do more of the same. And that’s a problem because, eventually, sameness runs out of gas.

Innovation starts with different, but past tense success locks us into future tense sameness. And that’s the rub with success – success breeds sameness and sameness blocks innovation. It’s a strange duality – success is the carrot for innovation and also its deterrent. To manage this strange duality, don’t limit success; limit how much it limits you.

The key to busting out of the shackles of your success is doing more things that are different, and the best way to do that is with no-to-yes.

If your product can’t do something and you change it so it can, that’s no-to-yes. By definition, no-to-yes creates novelty, creates new design space and provides the means to enter (or create) new markets. Here’s how to do it.

Scan all the products in your industry and identify the product that can operate with the smallest inputs. (For example, the cell phone that can run on the smallest battery.) Below this input level there are no products that can function – you’ve identified green field design space that you can have all to yourself. Now, use the industry-low input to create a design constraint: divide it by two, and that is the no-to-yes threshold. Before you do the work, your product cannot operate with this small input (no), but after your hard work, it can (yes). By definition, the new product will be novel.

Do the same thing for outputs. Scan all the products in your industry to find the smallest output. (For example, the automobile with the smallest engine.) Divide the output by two, and this is your no-to-yes threshold. Before the new design, no car has an engine smaller than the threshold (no), and after the hard work, yours does (yes). By definition, the new car will be novel.
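The threshold arithmetic in these two steps is simple enough to sketch in a few lines of Python. This is an illustrative sketch, not the author's tool: the function name `no_to_yes_threshold` and the battery capacities are hypothetical values chosen only to show the calculation (industry low divided by two).

```python
def no_to_yes_threshold(industry_values):
    """Return the no-to-yes threshold: the industry-low value divided by two.

    `industry_values` is a hypothetical list of the input (or output) levels
    of every product found in the industry scan.
    """
    if not industry_values:
        raise ValueError("need at least one product from the industry scan")
    industry_low = min(industry_values)  # smallest input/output anyone achieves today
    return industry_low / 2              # halve it to create the design constraint


# Hypothetical battery capacities (mAh) from an imaginary industry scan.
battery_capacities = [3000, 1800, 2400]
print(no_to_yes_threshold(battery_capacities))  # 900.0
```

The same function works for outputs such as engine size; a new design that functions at or below the returned value has gone from no to yes.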

A strange thing happens when inputs and outputs are reduced – it becomes clear existing technologies don’t cut it, and new, smaller, lower cost technologies become viable. The no-to-yes threshold (the constraint) breaks the shackles of success and guides thinking in new directions.

Once the prototypes are built, the work shifts to finding a market the novel concept can satisfy. The good news is you’re armed with prototypes that do things nothing else can do, and the bad news is your existing customers won’t like the prototypes so you’ll have to seek out new customers. (And, really, that’s not so bad because those new customers are the early adopters of the new market you just created.)

No-to-yes thinking is powerful, and though I described how it’s used with products, it’s equally powerful for services, business models and systems.

If you want innovation (and its results), use no-to-yes thinking to find the limits and work outside them.

To make the right decision, use the right data.

When it’s time for a tough decision, it’s time to use data. The idea is the data removes biases and opinions so the decision is grounded in the fundamentals. But using the right data the right way takes a lot of discipline and care.

The most straightforward decision is a decision between two things – an either or – and here’s how it goes.

The first step is to agree on the test protocols and measurement systems used to create the data. To eliminate biases, this is done before any testing. The test protocols are the actual procedural steps to run the tests and are revision-controlled documents. The measurement systems are also fully defined, including the make and model of the machine/hardware, full definition of the fixtures and supporting equipment, and a measurement protocol (the steps to do the measurements).

The next step is to create the charts and graphs used to present the data. (Again, this is done before any testing.) The simplest and best is the bar chart – with one bar for A and one bar for B. But for all formats, the axes are labeled (including units), the test protocol is referenced (with its document number and revision letter), and the title is created. The title defines the type of test, important shared elements of the tested configurations and important input conditions. The title helps make sure the tested configurations are the same in the ways they should be. And to be doubly sure they’re the same, once the graph is populated with the actual test data, a small image of the tested configurations can be added next to each bar.

The configurations under test change over time, and it’s important to maintain linkage between the test data and the tested configuration. This can be accomplished with descriptive titles and formal revision numbers of the test configurations. When you choose design concept A over concept B but unknowingly use data from the wrong revisions, it’s still a data-driven decision; it’s just the wrong one.

But the most important problem to guard against is a mismatch between the tested configuration and the configuration used to create the cost estimate. To increase profit, test results need to go up and costs need to go down, and this natural pressure can create divergence between the tested and costed configurations. Test results predict how the configuration under test will perform in the field. The cost estimate predicts how much the costed configuration will cost. Though there’s a strong desire to have the performance of one configuration and the cost of another, things don’t work that way. When you launch, you’ll get the performance AND the cost of the configuration you launched. You might as well choose the configuration to launch using its performance data and cost as a matched pair.
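One way to enforce a matched pair, and to keep the revision linkage between data and configuration, is to make every test result and every cost estimate carry the configuration it describes, then refuse to combine them when those differ. The Python sketch below is an assumption about how that could look, not a procedure from the post; the class names, field names, revisions and numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Configuration:
    name: str      # hypothetical label, e.g. "concept A"
    revision: str  # formal revision letter of the configuration


@dataclass(frozen=True)
class PerformanceData:
    config: Configuration  # the configuration that was actually tested
    performance: float     # result measured per the agreed test protocol


@dataclass(frozen=True)
class CostEstimate:
    config: Configuration  # the configuration that was actually costed
    unit_cost: float


def matched_pair(data: PerformanceData, estimate: CostEstimate) -> Tuple[float, float]:
    """Return (performance, unit_cost) only if both describe the same configuration."""
    if data.config != estimate.config:  # compares name AND revision
        raise ValueError(
            f"tested {data.config} but costed {estimate.config}; "
            "performance and cost must come from the same configuration"
        )
    return data.performance, estimate.unit_cost


# Hypothetical example: revision B was tested, but someone costed revision C.
rev_b = Configuration("concept A", "B")
rev_c = Configuration("concept A", "C")
perf = PerformanceData(rev_b, performance=42.0)

print(matched_pair(perf, CostEstimate(rev_b, unit_cost=7.5)))  # (42.0, 7.5)

try:
    matched_pair(perf, CostEstimate(rev_c, unit_cost=6.0))
except ValueError as err:
    print("rejected:", err)
```

Making the configuration part of the data, rather than a note beside it, means a mismatch fails loudly instead of quietly producing a data-driven but wrong decision.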

All this detail may feel like overkill, but it isn’t, because the consequences of getting it wrong can decimate profitability. Here’s why:

Profit = (price – cost) x volume.

Test results predict goodness, and goodness defines what the customer will pay (price) and how many they’ll buy (volume). And cost is cost. And when it comes to profit, if you make the right decision with the wrong data, the wheels fall off.
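A small worked example shows how hard a cost mismatch hits. The Python sketch below simply evaluates Profit = (price – cost) x volume; the prices, costs and volume are made-up numbers for illustration, not data from the post. Because the margin (price minus cost) is multiplied by volume, a cost error of 2 on a margin of 4 cuts profit in half.

```python
def profit(price: float, cost: float, volume: float) -> float:
    """Profit = (price - cost) x volume."""
    return (price - cost) * volume


# Hypothetical numbers: the decision used the cost of one configuration,
# but the configuration actually launched costs more to make.
price, volume = 10.0, 1_000
expected = profit(price, cost=6.0, volume=volume)  # cost of the wrong configuration
actual = profit(price, cost=8.0, volume=volume)    # cost of what was launched
print(expected, actual)  # 4000.0 2000.0
```

The sketch holds price and volume fixed to isolate the cost mismatch; since test results also drive price and volume, mismatched performance data would shift those terms as well.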

Image credit – alabaster crow photographic

Mike Shipulski