Archive for the ‘Product Development’ Category

Is the new one better than the old one?

Successful commercialization of products and services is fueled by one fundamental – making the new one better than the old one. If the new one is better the customer experience is better, the marketing is better, the sales are better and the profits are better.

It’s not enough to know in your heart that the new one is better; there’s got to be objective evidence that demonstrates the improvement. The only way to do that is with testing. There are a number of testing mechanisms, but whether it’s surveys, interviews or in-the-lab experiments, test results must be quantifiable and repeatable.

The best way I know to determine if the new one is better than the old one is to test both populations with the same test protocol done on the same test setup and measure the results (in a quantified way) using the same measurement system. Sounds easy, but it’s not. The biggest mistake is the confusion between the “same” test conditions and “almost the same” test conditions. If the test protocol is slightly different there’s no way to tell if the difference between new and old is due to goodness of the new design or the badness of the test setup. This type of uncertainty won’t cut it.

You can never be 100% sure that the new one is better than the old one, but that’s where statistics come in handy. Without getting deep into the statistics, here’s how it goes. For both populations’ test results the mean and standard deviation (spread) are calculated, and taking into consideration the sample size of the test results, the statistical test will tell you if they’re different and the confidence of its discernment.

The statistical calculations (Student’s t-test) aren’t all that important; what’s important is to understand the implications of the calculations. When there’s a small difference between new and old, the sample size must be large for the statistics to recognize a difference. When the difference between populations is huge, a sample size of one will do nicely. When the spread of the data within a population is large, the statistics need a large sample size or they can’t tell new from old. But when the data is tight, they can see more clearly and need fewer samples to see a difference.
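To make those implications concrete, here is a minimal Python sketch of the equal-variance (Student’s) two-sample t statistic, using only the standard library. The function name `pooled_t_statistic` and all the sample numbers are made up for illustration; they are not real test data. A larger absolute t means the statistics see the difference between new and old more clearly.

```python
import math
import statistics


def pooled_t_statistic(old, new):
    """Return (t, degrees_of_freedom) for the equal-variance two-sample t-test."""
    n_old, n_new = len(old), len(new)
    mean_old, mean_new = statistics.mean(old), statistics.mean(new)
    # Sample variances (n - 1 in the denominator) describe the spread.
    var_old, var_new = statistics.variance(old), statistics.variance(new)
    df = n_old + n_new - 2
    pooled_var = ((n_old - 1) * var_old + (n_new - 1) * var_new) / df
    # The standard error shrinks as sample size grows and as spread tightens.
    std_err = math.sqrt(pooled_var * (1 / n_old + 1 / n_new))
    return (mean_new - mean_old) / std_err, df


# Hypothetical results: same 0.5 difference in means, tight spread vs. wide spread.
old_tight = [10.0, 10.2, 9.8, 10.1, 9.9]
new_tight = [10.5, 10.7, 10.3, 10.6, 10.4]
old_wide = [8.0, 12.0, 9.0, 11.0, 10.0]
new_wide = [8.5, 12.5, 9.5, 11.5, 10.5]

t_tight, df_tight = pooled_t_statistic(old_tight, new_tight)  # t is roughly 5.0
t_wide, df_wide = pooled_t_statistic(old_wide, new_wide)      # t is roughly 0.5
print(f"tight data: t = {t_tight:.2f} (df = {df_tight})")
print(f"wide data:  t = {t_wide:.2f} (df = {df_wide})")
```

For reference, with 8 degrees of freedom the two-sided 95% critical value from a t-table is about 2.31, so the tight data clears it and the wide data does not, even though the difference in means is the same. Note that this form of the calculation needs at least two results per population to estimate the spread.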

If marketing claims are based on large sample sizes, the difference between new and old is small. (No one uses large sample sizes unless they have to because they’re expensive.) But if in a design review for the new product the sample size is three and the statistical confidence is 95%, new is far better than old. If the average of new is much larger than the average of old and the sample size is large yet the confidence is low, the statistics know there’s a lot of variability within the populations. (A visual check should show the distributions to be more wide than tall.)

The measurement systems used in the experiments can give a good indication of the difference between new and old. If the measurement system is expensive and complicated, likely the difference between new and old is small. Like with large sample sizes, the only time to use an expensive measurement system is when it is needed. And when the difference between new and old is small, what’s needed is the expensive measurement system’s ability to accurately and repeatably measure small differences (micrometers vs. meters).

If you need large sample sizes, expensive measurement systems and complicated statistical analyses, the new one isn’t all that different from the old one. And when that’s the case, your new profits will be much like your old ones. But if your naked eye can see the difference with a back-to-back comparison using a sample size of one, you’re on to something.

Image credit – amanda tipton

If you don’t know the critical path, you don’t know very much.

Once you have a project to work on, it’s always a challenge to choose the first task. And once finished with the first task, the next hardest thing is to figure out the next task.

Two words to live by: Critical Path.

By definition, the next task to work on is the next task on the critical path. How do you tell if the task is on the critical path? When you are late by one day on a critical path task, the project, as a whole, will finish a day late. If you are late by one day and the project won’t be delayed, the task is not on the critical path and you shouldn’t work on it.
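The one-day-late test can be run on paper. Here is a minimal Python sketch, assuming each task has a duration in days and a list of prerequisites, and that tasks are listed so prerequisites come first. The task names and durations are hypothetical. Tasks with zero slack are on the critical path; everything else has room to slip.

```python
def critical_path(tasks):
    """tasks: {name: (duration_days, [prerequisites])}, prerequisites listed first.

    Returns (project_length, critical_task_names, slack_by_task).
    """
    # Forward pass: the earliest each task can finish.
    earliest_finish = {}
    for name, (duration, prereqs) in tasks.items():
        start = max((earliest_finish[p] for p in prereqs), default=0)
        earliest_finish[name] = start + duration
    project_length = max(earliest_finish.values())

    # Backward pass: the latest each task can finish without delaying the project.
    latest_finish = {name: project_length for name in tasks}
    for name in reversed(list(tasks)):
        duration, prereqs = tasks[name]
        latest_start = latest_finish[name] - duration
        for p in prereqs:
            latest_finish[p] = min(latest_finish[p], latest_start)

    slack = {name: latest_finish[name] - earliest_finish[name] for name in tasks}
    critical = [name for name in tasks if slack[name] == 0]
    return project_length, critical, slack


# Hypothetical project plan.
tasks = {
    "design": (5, []),
    "prototype": (10, ["design"]),
    "write_manual": (3, ["design"]),
    "test": (4, ["prototype"]),
    "launch": (1, ["test", "write_manual"]),
}
length, critical, slack = critical_path(tasks)

# Slip a critical task by one day, then a non-critical task by one day.
late_prototype = dict(tasks, prototype=(11, ["design"]))
late_manual = dict(tasks, write_manual=(4, ["design"]))
length_late_prototype = critical_path(late_prototype)[0]
length_late_manual = critical_path(late_manual)[0]
print(length, critical, length_late_prototype, length_late_manual)
```

In this made-up plan, a one-day slip on the prototype pushes the whole project out by a day, while a one-day slip on the manual changes nothing.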

Rule 1: If you can’t work the critical path, don’t work on anything.

Working on a non-critical path task is worse than working on nothing. Working on a non-critical path task is like waiting with perspiration. It’s worse than activity without progress. Resources are consumed on unnecessary tasks and the resulting work creates extra constraints on future work, all in the name of leveraging the work you shouldn’t have done in the first place.

How to spot the critical path? If a similar project has been done before, ask the project manager what the critical path was for that project. Then listen, because that’s the critical path. If your project is similar to a previous project except with some incremental newness, the newness is on the critical path.

Rule 2: Newness, by definition, is on the critical path.

But as the level of newness increases, it’s more difficult for project managers to tell the critical path from work that should wait. If you’re the right project manager, even for projects with significant newness, you are able to feel the critical path in your chest. When you’re the right project manager, you can walk through the cubicles and your body is drawn to the critical path like a divining rod. When you’re the right project manager and someone in another building is late on their critical path task, you somehow unknowingly end up getting a haircut at the same time and offering them the resources they need to get back on track. When you’re the right project manager, the universe notifies you when the critical path has gone critical.

Rule 3: The only way to be the right project manager is to run a lot of projects and read a lot. (I prefer historical fiction and biographies.)

Not all newness is created equal. If the project won’t launch unless the newness is wrestled to the ground, that’s level 5 newness. Stop everything, clear the decks, and get after it until it succumbs to your diligence. If the product won’t sell without the newness, that’s level 5 and you should behave accordingly. If the newness causes the product to cost a bit more than expected, but the product will still sell like nobody’s business, that’s level 2. Launch it and cost reduce it later. If no one will notice if the newness doesn’t make it into the product, that’s level 0 newness. (Actually, it’s not newness at all, it’s unneeded complexity.) Don’t put it in the product and don’t bother telling anyone.

Rule 4: The newness you’re afraid of isn’t the newness you should be afraid of.

A good project plan starts with a good understanding of the newness. Then, the right project work is defined to make sure the newness gets the attention it deserves. The problem isn’t the newness you know, the problem is the unknown consequence of newness as it ripples through the commercialization engine. New product functionality gets engineering attention until it’s run to ground. But what if the newness ripples into new materials that can’t be made or new assembly methods that don’t exist? What if the new materials are banned substances? What if your multi-million dollar test stations don’t have the capability to accommodate the new functionality? What if the value proposition is new and your sales team doesn’t know how to sell it? What if the newness requires a new distribution channel you don’t have? What if your service organization doesn’t have the ability to diagnose a failure of the new newness?

Rule 5: The only way to develop the capability to handle newness is to pair a soon-to-be great project manager with an already great project manager.

It may sound like an inefficient way to solve the problem, but pairing the two project managers is a lot more efficient than letting a soon-to-be great project manager crash and burn. After an inexperienced project manager runs a project into the ground, what’s the first thing you do? You bring in a great project manager to get the project back on track and keep them in the saddle until the product launches. Why not assume the wheels will fall off unless you put a pro alongside the high potential talent?

Rule 6: When your best project managers tell you they need resources, give them what they ask for.

If you want to deliver new value to new customers there’s no better way than to develop good project managers. A good project manager instinctively knows the critical path; they know how the work is done; they know how to unwind situations that need to be unwound; they have the personal relationships to get things done when no one else can; because they are trusted, they can get people to bend (and sometimes break) the rules and feel good doing it; and they know what they need to successfully launch the product.

If you don’t know your critical path, you don’t know very much. And if your project managers don’t know the critical path, you should stop what you’re doing, pull hard on the emergency brake with both hands and don’t release it until you know they know.

Image credit – Patrick Emerson

Patents are supposed to improve profitability.

Everyone likes patents, but few use them as a business tool.

Patents define rights assigned by governments to inventors (really, the companies they work for) where the assignee has the right to exclude others from practicing the concepts described in the patent claims. And patent rights are limited to the countries that grant patents. If you want to get patent rights in a country, you submit your request (application) and run their gauntlet. Patents are a country-by-country business.

Patents are expensive. Small companies struggle to justify the expense of filing a single patent and big companies struggle to justify the expense of their portfolio. All companies want to reduce patent costs.

The patent process starts with invention. Someone must go to the lab and invent something. The invention is documented by the inventor (invention disclosure) and the invention is scored by a cross-functional team to decide if it’s worthy of filing. If deemed worthy, a clearance search is done to see if it’s different from all other patents, all products offered for sale, and all the other literature in the public domain (research papers, publications). Then the patent attorneys work their expensive magic to draft a patent application and file it with the government of choice. And when the rejection arrives, the attorneys do some research, address the examiner’s concerns and submit the paperwork.

Once granted, the fun begins. The company must keep watch on the marketplace to make sure no one sells products that use the patented technology. It’s a costly, never-ending battle. If infringement is suspected, the attorneys exchange documents in a cease-and-desist jousting match. If there’s no resolution, it’s time to go to court where litigation work turns up the burn rate to eleven.

To reduce costs, companies try to reduce the price they pay to outside law firms that draft their patents. It’s a race to the bottom where no one wins. Outside firms get paid less money per patent and the client gets patents that aren’t as good as they could be. It’s a best practice, but it’s not best. Treating patent work as a cost center isn’t right. Patents are a business tool that helps companies make money.

Companies are in business to make money and they do that by selling products for more than the cost to make them. They set clear business objectives for growth and define the market-customers to fuel that growth. And the growth is powered by the magic engine of innovation. Innovation creates products/services that are novel, useful and successful and patents protect them. That’s what patents do best and that’s how companies should use them.

If you don’t have a lot of time and you want to understand a patent, read the claims. If you have less time, read the independent claims. Chris Brown, Ph.D.

Patents are all about claims. The claims define how the invention is different (novel) from what’s in the public domain (prior art). And since innovation starts with different, patents fit nicely within the innovation framework. Instead of trying to reduce patent costs, companies should focus on better claims, because better claims mean better patents. Here are some thoughts on what makes for good claims.

Patent claims should capture the novelty of the invention, but sometimes the words are wrong and the claims don’t cover the invention. And when that happens, the patent issues but it does not protect the invention – all the downside with none of the upside. The best way to make sure the claims cover the invention is for the inventor to review the claims before the patent is filed. This makes for a nice closed-loop process.

When a novel technology has the potential to provide useful benefit to a customer, engineers turn those technologies into prototypes and test them in the lab. Since engineers are minimum energy creatures and make prototypes for only the technologies that matter, if the patent claims cover the prototype, those are good claims.

When the prototype is developed into a product that is sold in the market and the novel technology covered by the claims is what makes the product successful, those are good claims.

If you were to remove the patented technology from the product and your customer would notice it instantly and become incensed, those are the best claims.

Instead of reducing the cost of patents, create processes to make sure the right claims are created. Instead of cutting corners, embed your patent attorneys in the technology development process to file patents on the most important, most viable technology. Instead of handing off invention disclosures to an isolated patent team, get them involved in the corporate planning process so they understand the business objectives and operating plans. Get your patent attorneys out in the field and let them talk to customers. That way they’ll know how to spot customer value and write good claims around it.

Patents are an important business tool and should be used that way. Patents should help your company make money. But patents aren’t the right solution to all problems. Patent work can be slow, expensive and uncertain. A more powerful and more certain approach is a strong investment in understanding the market, ritualistic technology development, solid commercialization and a relentless pursuit of speed. And the icing on the top – a slathering of good patent claims to protect the most important bits.

Image credit – Matthais Weinberger

Hands-On or Hands-Off?

Hands-on versus hands-off – as a leader it’s a fundamental choice. And for me the single most important guiding principle is – do what it takes to maintain or strengthen the team’s personal ownership of the work.

If things are going well, keep your hands off. This reinforces the team’s ownership and your trust in them. But it’s not hands-off in an ignore-them sense; it’s hands-off in a don’t-tell-them-what-to-do sense. Walk around, touch base and check in to show interest in the work and avoid interrogation-based methods that undermine your confidence in them. This is not to say a hands-off leader only superficially knows what’s going on; it should only look like the leader has a superficial understanding.

The hands-off approach requires a deep understanding of the work and the people doing it. The hands-off leader must make the time to know the GPS coordinates of the project and then do reconnaissance work to identify the positions of the quagmires and quicksand that lie ahead. The hands-off leader waits patiently just in front of the obstacles and makes no course correction if the team can successfully navigate the gauntlet. But when the team is about to sink to their waists, the leader gently nudges so they skirt the dangerous territory.

Unless, of course, the team needs some learning. And in that case, the leader lets the team march its project into the mud. If they need just a bit of learning the leader lets them get a little muddy; and if the team needs deep learning, the leader lets them sink to their necks. Either way, the leader is waiting under cover as they approach the impending snafu and is right beside them to pull them out. But to the team, the hands-off leader is not out in front scouting the new territory. To them, the hands-off leader doesn’t pay all that much attention. To the team, it’s just a coincidence the leader happens to attend the project meeting at a pivotal time and they don’t even recognize when the leader subtly plants the idea that lets the team pull themselves out of the mud.

If after three or four near-drowning incidents the team does not learn or change its behavior, it’s time for the hands-off approach to look and feel more hands-on. The leader calls a special meeting where the team presents the status of the project and grounds the project in the now. Then, with everyone on the same page the leader facilitates a process where the next bit of work is defined in excruciating detail. What is the next learning objective? What is the test plan? What will be measured? How will it be measured? How will the data be presented? If the tests go as planned, what will you know? What won’t you know? How will you use the knowledge to inform the next experiments? When will we get together to review the test results and your go-forward recommendations?

By intent, this tightening down does not go unnoticed. The next bit of work is well defined and everyone is clear how and when the work will be completed and when the team will report back with the results. The leader reverts back to hands-off until the band gets back together to review the results where it’s back to hands-on. It’s the leader’s judgement on how many rounds of hands-on roulette the team needs, but the fun continues until the team’s behavior changes or the project ends in success.

For me, leadership is always hands-on, but it’s hands-on that looks like hands-off. This way the team gets the right guidance and maintains ownership. And as long as things are going well this is a good way to go. But sometimes the team needs to know you are right there in the trenches with them, and then it’s time for hands-on to look like hands-on. Either way, it’s vital the team knows they own the project.

There are no schools that teach this. The only way to learn is to jump in with both feet and take an active role in the most important projects.

Image credit – Kerri Lee Smith

To make the right decision, use the right data.

When it’s time for a tough decision, it’s time to use data. The idea is the data removes biases and opinions so the decision is grounded in the fundamentals. But using the right data the right way takes a lot of discipline and care.

The most straightforward decision is a decision between two things – an either or – and here’s how it goes.

The first step is to agree on the test protocols and measurement systems used to create the data. To eliminate biases, this is done before any testing. The test protocols are the actual procedural steps to run the tests and are revision controlled documents. The measurement systems are also fully defined. This includes the make and model of the machine/hardware, full definition of the fixtures and supporting equipment, and a measurement protocol (the steps to do the measurements).

The next step is to create the charts and graphs used to present the data. (Again, this is done before any testing.) The simplest and best is the bar chart – with one bar for A and one bar for B. But for all formats, the axes are labeled (including units), the test protocol is referenced (with its document number and revision letter), and the title is created. The title defines the type of test, important shared elements of the tested configurations and important input conditions. The title helps make sure the tested configurations are the same in the ways they should be. And to be doubly sure they’re the same, once the graph is populated with the actual test data, a small image of the tested configurations can be added next to each bar.

The configurations under test change over time, and it’s important to maintain linkage between the test data and the tested configuration. This can be accomplished with descriptive titles and formal revision numbers of the test configurations. When you choose design concept A over concept B but unknowingly use data from the wrong revisions it’s still a data-driven decision, it’s just the wrong one.
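One way to keep that linkage is to store the revisions alongside every number. The Python sketch below is only an illustration under assumed names: the `MeasuredResult` record, the two check functions, the document number `TP-001`, and the measured values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MeasuredResult:
    config_name: str        # design concept, e.g. "A" or "B"
    config_revision: str    # formal revision of the tested configuration
    protocol_doc: str       # test protocol document number
    protocol_revision: str  # revision letter of the test protocol
    value: float            # measured result, in the units on the chart axis


def check_comparable(a, b):
    """Raise ValueError unless both results came from the same protocol revision."""
    if (a.protocol_doc, a.protocol_revision) != (b.protocol_doc, b.protocol_revision):
        raise ValueError("results were measured under different test protocols")


def check_revision(result, intended_revision):
    """Raise ValueError if the data is not from the revision being decided on."""
    if result.config_revision != intended_revision:
        raise ValueError(
            f"data is from {result.config_revision}, not {intended_revision}"
        )


# Hypothetical records for an A-versus-B decision.
result_a = MeasuredResult("A", "rev 3", "TP-001", "B", 41.2)
result_b = MeasuredResult("B", "rev 2", "TP-001", "B", 38.7)

check_comparable(result_a, result_b)  # same protocol and revision, so no error
check_revision(result_a, "rev 3")     # data matches the revision under review
```

The point of the design is that a comparison across mismatched revisions fails loudly instead of quietly producing a chart.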

But the most important problem to guard against is a mismatch between the tested configuration and the configuration used to create the cost estimate. To increase profit, test results want to increase and costs want to decrease, and this natural pressure can create divergence between the tested and costed configurations. Test results predict how the configuration under test will perform in the field. The cost estimate predicts how much the costed configuration will cost. Though there’s strong desire to have the performance of one configuration and the cost of another, things don’t work that way. When you launch you’ll get the performance of AND cost of the configuration you launched. You might as well choose the configuration to launch using performance data and cost as a matched pair.

All this detail may feel like overkill, but it’s not because the consequences of getting it wrong can decimate profitability. Here’s why:

Profit = (price – cost) x volume.

Test results predict goodness, and goodness defines what the customer will pay (price) and how many they’ll buy (volume). And cost is cost. And when it comes to profit, if you make the right decision with the wrong data, the wheels fall off.
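A quick Python sketch with made-up numbers shows how a mismatched pair distorts the prediction. The prices, costs, and volumes below are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def profit(price, cost, volume):
    """Profit = (price - cost) x volume, as in the formula above."""
    return (price - cost) * volume


# Hypothetical matched pairs: each configuration's own performance and own cost.
config_a = {"price": 100, "cost": 60, "volume": 1000}
config_b = {"price": 120, "cost": 90, "volume": 1200}

profit_a = profit(**config_a)  # (100 - 60) x 1000 = 40000
profit_b = profit(**config_b)  # (120 - 90) x 1200 = 36000

# Mismatched pair: B's tested performance (price, volume) with A's cost estimate.
# This configuration does not exist, so this profit cannot be launched.
profit_mismatched = profit(config_b["price"], config_a["cost"], config_b["volume"])

print(profit_a, profit_b, profit_mismatched)
```

In this example the mismatched pair predicts double the profit of configuration B, and would steer the decision toward B even though the matched data favors A.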

Image credit – alabaster crow photographic

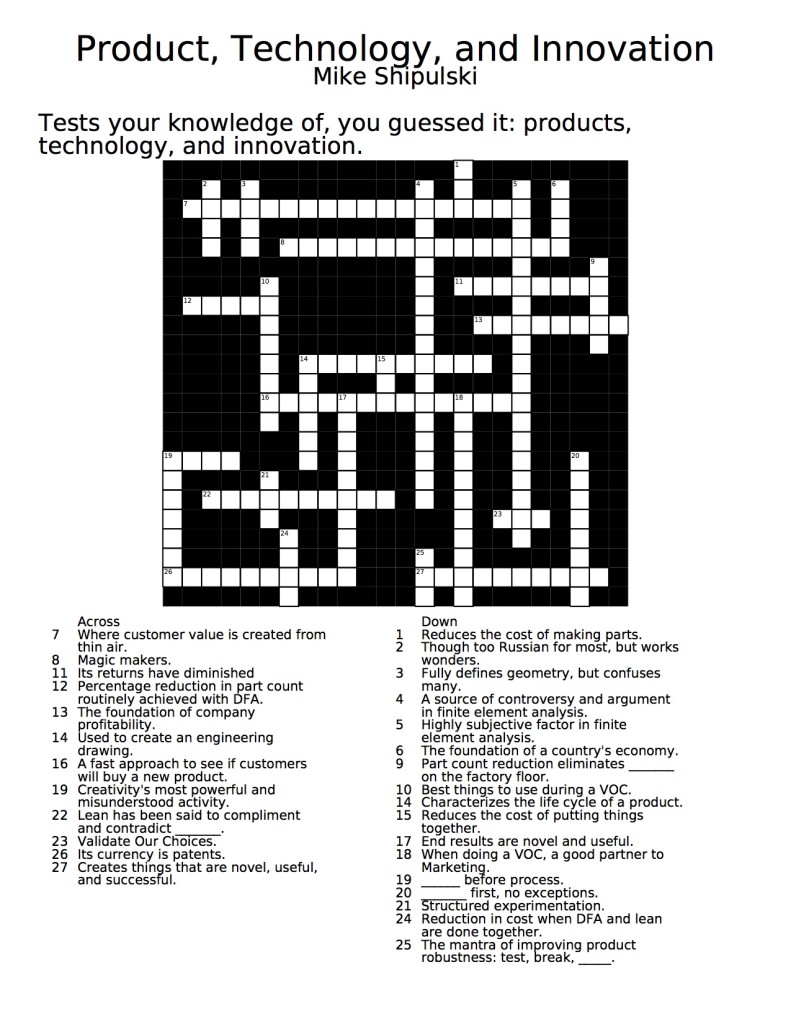

Crossword Puzzle – Product, Technology, Innovation

Here’s something a little different – a crossword puzzle to test your knowledge on products, technology, and innovation. Complete the puzzle using the image below, or download it (and answer key) using the green arrows below, and take your time with it over the Thanksgiving holiday.

[wpdm_file id=5]

[wpdm_file id=4]

The Threshold Of Uncertainty

Our threshold for uncertainty is too low.

Early in projects, even before the first prototype is up and running, you know what the product must do, what it will cost, and, most problematic, when you’ll be done. Independent of work content, level of newness, and workloads, there’s no uncertainty in your launch date. It’s etched in stone and the consequences are devastating.

A zero tolerance policy on uncertainty forces irrational behavior. As soon as possible, engineering gets something running in the lab, and then doesn’t want to change it because there’s no time. The prototype is almost impossible to build and is hypersensitive to normal process variation, but these issues are not addressed because there’s no time. Everyone agrees it’s important to fix it, and agrees to fix it after launch, but that never happens because the next project is already late before it starts. And the death cycle repeats project after project.

The root cause of this mess is the mistaken porting of manufacturing’s zero uncertainty mindset into design. The thinking goes like this – lean and Six Sigma have achieved magical success in manufacturing by eliminating uncertainty, so let’s do it in product design and achieve similar results. This is a fundamental mistake as the domains are fundamentally different.

In manufacturing the same product is made day-in and day-out – no uncertainty; in product design no two product development efforts are the same and there’s lots of stuff that’s done for the first time – uncertainty by definition. In manufacturing there’s a revision controlled engineering drawing that defines the right answer (the geometry and the material) – make it like the picture and it’s all good; in product design the material is chosen from many candidates and the geometry is created from scratch – the picture is created from nothing. By definition there’s more inherent uncertainty in product design, and to tighten the screws and fix the launch date at the start is inappropriate.

Design engineers must feel like there’s enough time to try new things because new products that provide new functionality require new technologies, new materials, and new geometries. With new comes inherent uncertainty, but there are ways to manage it.

To hold the timeline, give on the specification and cost. Design as fast as you can until you run out of time then launch. The product won’t work as well as you’d like and it will cost more than you’d like, but you’ll hit the schedule. A good way to do this is to de-feature a subassembly to reduce design time, and possibly reduce cost. Or, reuse a proven subassembly to reduce design time – take a hit in cost, but hit the timeline. The general idea – hold schedule but flex on performance and cost.

It feels like sacrilege to admit that something’s got to give, but it’s the truth. You’ve seen how it goes when you edict (in no uncertain terms) that the timeline will be met and there’ll be no give on performance and cost. It hasn’t worked, and it won’t – the inherent uncertainty of product design won’t let it.

Accept the uncertainty; be one with it; and manage it. It’s the only way.

Define To Solve

Countries want their companies to create wealth and jobs, and to do it they want them to design products, make those products within their borders, and sell the products for more than the cost to make them. It’s a simple and sustainable recipe which makes for a highly competitive landscape, and it’s this competition that fuels innovation.

When companies do innovation they convert ideas into products which they make (jobs) and sell (wealth). But for innovation, not any old idea will do; innovation is about ideas that create novel and useful functionality. And standing squarely between ideas and commercialization are tough problems that must be solved. Solve them and products do new things (or do them better), become smaller, lighter, or faster, and people buy them (wealth).

But here’s the part to remember – problems are the precursor to innovation.

Before there can be an innovation you must have a problem. Before you develop new materials, you must have problems with your existing ones; before your new products do things better, you must have a problem with today’s; before your products are miniaturized, your existing ones must be too big. But problems aren’t acknowledged for their high station.

There are problems with problems – there’s an atmosphere of negativity around them, and you don’t like to admit you have them. And there’s power in problems – implicit in them are the need for change and consequence for inaction. But problems can be more powerful if you link them tightly and explicitly to innovation. If you do, problem solving becomes a far more popular sport, which, in turn, improves your problem solving ability.

But the best thing you can do to improve your problem solving is to spend more time doing problem definition. But for innovation not any old problem definition will do. Innovation requires level 5 problem definition where you take the time to define problems narrowly and deeply, to define them between just two things, to define when and where problems occur, to define them with sketches and cartoons to eliminate words, and to dig for physical mechanisms.

With the deep dive of level 5 you avoid digging in the wrong dirt and solving the wrong problem because it pinpoints the problem in space and time and explicitly calls out its mechanism. Level 5 problem definition doesn’t define the problem, it defines the solution.

It’s not glamorous, it’s not popular, and it’s difficult, but this deep, mechanism-based problem definition, where the problem is confined tightly in space and time, is the most important thing you can do to improve innovation.

With level 5 problem definition you can transform your company’s profitability and your country’s economy. It does not get any more relevant than that.

It’s All Connected

There’s a natural tendency to simplify, to reduce, to narrow. In the name of problem solving, it’s narrow the scope, break it into small bites, and don’t worry about the subtle complexities. And for a lot of situations that works. But after years of fixing things one bite at a time, there are fewer and fewer situations that fit the divide and conquer approach. (Actually, they’re still there, but their return on investment is super low.) And after years of serial discretization, what are left are situations that cannot be broken up, that cut across interfaces, that make up a continuum. What are left are big problems and big situations that have huge payoff if solved, but are interconnected.

Whether it’s cross-discipline, cross-organization, cross-cultural, or cross-best practice, the fundamental of these big kahunas is they cross interfaces. And that’s why they’ve never been attacked, and that’s why they’ve never been solved. But with payoffs so big, it’s time to take on connectedness.

For me, the most severe example of connectedness is woven around the product. To commercialize a product there are countless business processes that cut across almost every interface. Here are a few: innovation, technology development, product development, robustness testing, product documentation, manufacturing engineering, marketing, sales, and service. Each of these processes is led by one organization and cuts across many; each cuts across expertise-specialization interfaces; each requires information and knowledge from the other; and each new product development project must cooperate with all the others. They cannot be separated or broken into bits. Change one with intent and change the others with unintended consequences. No doubt – they’re connected.

Green thinking is long overdue, but with it comes connectedness squared. With pre-green product commercialization, the product flowed to the end user and that was about it. But with the environmental movement there’s a whole new return path of interconnected business processes. Green thinking has turned the product life cycle into the circle of life – the product leaves, it lives its life, and it always comes back home.

And with this return path of connectedness, how the product goes together in manufacturing must be defined in conjunction with how it will be disassembled and recycled. Stress analysis must be coordinated with packaging design, regulations of banned substances, and material reuse of retired product. Marketing literature must be co-produced with regulatory strategy and recycling technologies. It’s connected more than ever.

But the bad news is the good news. Yes, things are more interwoven and the spider web is more tangled. But the upside – companies that can manage the complexity will have a significant advantage. Those that can navigate within connectedness will win.

The first step is to admit there’s a problem, and before connectedness can be managed, it must be recognized. And before it can become competitive advantage, it must be embraced.

Product Thinking

Product costs, without product thinking, drop 2% per year. With product thinking, product costs fall by 50%, and while your competitors’ profit margins drift downward, yours are too high to track by conventional methods. And your company is known for unending increases in stock price and long-term investment in all the things that secure the future.

The supply chain, without product thinking, improves 3% per year. With product thinking, longest lead processes are eliminated, poorest yield processes are a thing of the past, problem suppliers are gone, and your distributors associate your brand with uninterrupted supply and on-time delivery.

Product robustness, without product thinking, is the same year-on-year. With long-forgotten product thinking re-injected to simplify the product, product robustness jumps to previously unattainable levels and warranty costs plummet. And your brand is known for products that simply don’t break.

Rolled throughput yield is stalled at 90%. With product thinking, the product is simplified, opportunities for defects are reduced, and throughput skyrockets due to improved RTY. And your brand is known as a good value – providing good, repeatable functionality at a good price.
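
To make the arithmetic behind that claim concrete, here is a minimal sketch. It assumes the standard definition of rolled throughput yield as the product of the first-pass yields of every process step; the step counts and the 99% per-step yield are hypothetical numbers chosen for illustration, not data from any real product.

```python
from math import prod

def rolled_throughput_yield(step_yields):
    """Multiply the first-pass yields of all process steps together."""
    return prod(step_yields)

# Hypothetical numbers, for illustration only.
before = [0.99] * 10  # ten process steps, each at 99% first-pass yield
after = [0.99] * 5    # simplified product: half the steps, same per-step yield

rty_before = rolled_throughput_yield(before)
rty_after = rolled_throughput_yield(after)

print(f"10 steps: RTY = {rty_before:.3f}")
print(f" 5 steps: RTY = {rty_after:.3f}")
```

Under these assumptions, ten steps at 99% roll up to roughly 90%, and removing half the steps lifts RTY to roughly 95% without improving any single step. Fewer opportunities for defects do the work.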

Lean, without product thinking, has delivered wonderful results, but the low-hanging fruit is gone and lean is moving into the back office. With product thinking, the design is changed and value-added work is eliminated along with its associated non-value-added work (which is about 8 times bigger); manufacturing monuments with their long changeover times are ripped out and sold to your competitors; work from two factories is consolidated into one; new work is taken on to fill the emptied factories; and profit per square foot triples. And your brand is known for best-in-class quality, unbeatable on-time delivery, world-class performance, and pioneering the next generation of lean.

The sales argument is low price and good payment terms. With product thinking, the argument starts with product performance and ends with product reliability. The sales team is energized, and your brand is linked with solid products that just plain work.

The marketing approach is stickers and new packaging. With product thinking, it’s based on competitive advantage explained in terms of head-to-head performance data and a richer feature set. And your brand stands for winning technology and killer products.

Product thinking isn’t for everyone. But for those that try – your brand will thank you.

Engineering Will Carry the Day

Engineering is more important than manufacturing – without engineering there is nothing to make, and engineering is more important than marketing – without it there is nothing to market.

If I could choose my competitive advantage, it would be an unreasonably strong engineering team.

Ideas have no value unless they’re morphed into winning products, and that’s what engineering does. Technology has no value unless it’s twisted into killer products. Guess who does that?

We have fully built-out methodologies for marketing, finance, and general management, each with all the necessary logic and matching toolsets, and manufacturing has lean. But there is no such thing for engineering. Stress analysis or thermal modeling? Build a prototype or do more thinking? Plastic or aluminum? Use an existing technology or invent a new one? What new technology should be invented? Launch the new product as it stands or improve product robustness? How is product robustness improved? Will the new product meet the specification? How will you know? Will it hit the cost target? Will it be manufacturable? Good luck scripting all that.

A comprehensive, step-by-step program for engineering is not possible.

Lean says process drives process, but that’s not right. The product dictates to the factory, and engineers dictate the product. The factory looks as it does because the product demands it, and the product looks as it does because engineers said so.

I’d rather have a product that is difficult to make but works great than one that jumps together but works poorly.

And what of innovation? The rhetoric says everyone innovates, but that’s just a nice story that helps everyone feel good. Some innovations are more equal than others. The most important innovations create the killer products, and the most important innovators are the ones that create them – the engineers.

Engineering as a cost center is a race to the bottom; engineering as a market creator will set you free.

The only question: How are you going to create a magical engineering team that changes the game?

Mike Shipulski
